Project Goals

We hope that this database, which we call HumanEva-I, will advance the human motion and pose estimation community by providing a structured, comprehensive development dataset with support code and quantitative evaluation metrics. The "I" in the name acknowledges that the current database has limitations and that what we learn from this first version will most likely lead to an improved database in the future. The motivation behind the design of the HumanEva-I dataset is that, as a research community, we need to answer the following questions:

- What is the state of the art in human pose estimation?

- What is the state of the art in human motion tracking?

- What algorithm design decisions affect human pose estimation and tracking performance, and to what extent?

- What are the strengths and weaknesses of different pose estimation and tracking algorithms?

- What are the main unsolved problems in human pose estimation and tracking?

In answering these questions, comparisons must be made across a variety of different methods and models to find which choices are most important for a practical and robust solution.

Data description

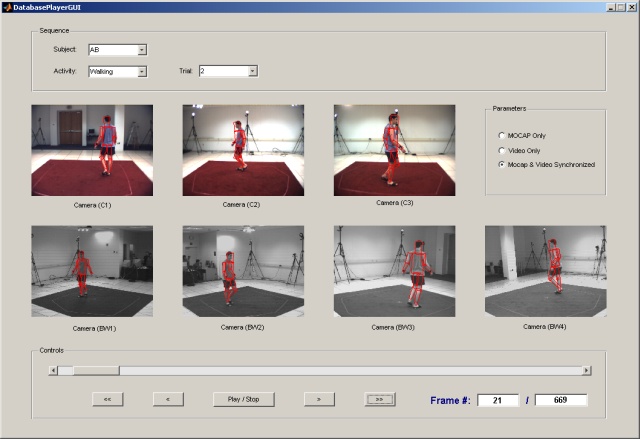

The HumanEva-I dataset contains 7 calibrated video sequences (4 grayscale and 3 color) that are synchronized with 3D body poses obtained from a motion capture system. The database contains 4 subjects performing 6 common actions (e.g. walking, jogging, gesturing). Metrics for computing error in 2D and 3D pose are provided to participants. The dataset is divided into training, validation, and testing sets; ground truth is withheld for the testing set.
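To make the idea of a pose error metric concrete, here is a minimal sketch. It assumes a pose is a list of marker positions (2D or 3D tuples) and that error is measured as the mean Euclidean distance between corresponding predicted and ground-truth markers. The function name `mean_marker_error` and this representation are illustrative assumptions, not the dataset's provided support code.

```python
import math


def mean_marker_error(predicted, ground_truth):
    """Mean Euclidean distance between corresponding markers.

    Hypothetical helper for illustration: each pose is a sequence of
    equal-dimension coordinate tuples, (x, y) for 2D or (x, y, z) for 3D.
    """
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("poses must be non-empty with matching marker counts")
    # Sum per-marker distances, then average over the number of markers.
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Example: two 3D markers, one off by 5 units, one exact.
error = mean_marker_error([(0, 0, 0), (1, 1, 1)], [(3, 4, 0), (1, 1, 1)])
print(error)  # 2.5
```

The same function works for 2D poses because `math.dist` accepts points of any matching dimension; the units of the result are those of the input coordinates.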

Example subjects

Example GUI

Acknowledgements

This project was supported in part by gifts from Honda Research Institute and Intel Corporation. We would like to thank Ming-Hsuan Yang, Rui Li, Alexandru Balan and Payman Yadollahpour for their help with data collection and post-processing. We would also like to thank Stan Sclaroff for making the color video capture equipment available for this effort.